
A closer look at script discovery using regex

The origins of regular expressions, or regex, can be traced back to the 1950s, when Stephen Cole Kleene formalized them as a mathematical notation for “regular events” while describing models of the human nervous system.

From 1968, regular expressions became widely used for pattern matching in text editors and for lexical analysis in compilers, using techniques pioneered by Ken Thompson and Walter Johnson et al. Thompson’s use of regular expressions in the Unix text editor ed gave rise to “grep,” named after the ed command g/re/p (globally search for a regular expression and print matching lines).

Alfred Aho extended this work in the 1970s with egrep (“extended grep”), which supported a range of additional syntax. POSIX standardized regular expressions in the late 1980s, defining Basic Regular Expressions (BRE) and Extended Regular Expressions (ERE).

Now supported by a vast range of programs, regular expressions are used for many text-processing tasks, including data discovery.

Data discovery using regex

Regex uses a standard syntax for matching patterns of characters. In data discovery, it is used as a script-based method of identifying types of data that match a defined pattern.

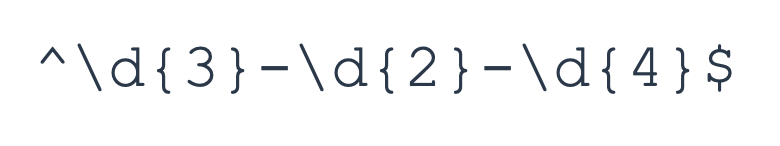

For example, the following regex pattern will match hyphen-separated Social Security Numbers (SSN) that use the format xxx-xx-xxxx, and which comprise only numerical values:
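As a minimal sketch of an expression that fits this description, assuming Python's built-in re module (the names BASIC_SSN and looks_like_ssn are hypothetical and chosen only for illustration):

```python
import re

# Hypothetical illustration: three digits, two digits, four digits,
# separated by hyphens, with ^ and $ anchoring the whole value.
BASIC_SSN = re.compile(r"^\d{3}-\d{2}-\d{4}$")


def looks_like_ssn(value: str) -> bool:
    """Return True if value has the xxx-xx-xxxx digit layout."""
    return BASIC_SSN.match(value) is not None


print(looks_like_ssn("123-45-6789"))  # True
print(looks_like_ssn("000-00-0000"))  # True, although this is not a valid SSN
print(looks_like_ssn("123456789"))    # False, no hyphens
```

The second call shows why layout alone is a weak test: the value has the right shape but could never be issued as an SSN.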

However, this expression has several limitations that may result in a high false-positive rate (identifying values that are not SSNs) and, arguably worse for compliance purposes, a high number of false negatives (missing values that are SSNs). The pattern lacks specificity around some of the rules that apply to valid SSNs. For instance, the first block of a Social Security Number cannot be 000 or 666, nor any value between 900 and 999.

We can define a more specific regex pattern that accounts for these rules, and that also excludes 00 for the second block of digits and 0000 for the final block:
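One way such a pattern might look is sketched below, assuming Python's re module and negative lookaheads. The names STRICT_SSN and is_plausible_ssn are hypothetical, and the expression is an illustration of the rules described here rather than a complete SSN validator.

```python
import re

# Each negative lookahead (?!...) rejects a block before it is consumed.
STRICT_SSN = re.compile(
    r"^(?!000|666|9\d{2})\d{3}"  # first block: not 000, 666, or 900-999
    r"-(?!00)\d{2}"              # second block: not 00
    r"-(?!0000)\d{4}$"           # final block: not 0000
)


def is_plausible_ssn(value: str) -> bool:
    """Return True if value passes the layout and block-exclusion rules."""
    return STRICT_SSN.match(value) is not None


for candidate in ("123-45-6789", "666-45-6789", "900-45-6789",
                  "123-00-6789", "123-45-0000"):
    print(candidate, is_plausible_ssn(candidate))
```

Only the first candidate passes; each of the others breaks exactly one of the block rules.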


Despite this, there are still a number of limitations to this script-based method of finding data.

The shortcomings of script-based discovery

When businesses perform a data discovery exercise for the first time, they often use script-based methods to identify potential matches. This approach has many shortcomings that can make it both time-consuming and ineffective:

- Quality: Data discovery using regex is limited by the quality of the regex pattern used to search for data. Any errors or omissions in the pattern reduce the quality and reliability of the results.

- Specificity: Regex patterns need to be very specific to limit the number of false positives they generate, as in our SSN examples. However, this need for specificity means that these patterns cannot identify data that doesn’t exactly match the defined format. In our example, the ^ and $ symbols anchor the match to the start and end of a line. These patterns would therefore ignore valid SSNs that form part of a longer character string, such as a sentence.

- Validation: Another way to limit false positives is by validating the character types of matched data. A pattern that does not constrain every character position to a digit could match values such as “123-34-456z” or “a123-34-456,” even though these are not valid SSNs.

- Variation: Because they are so specific, regex patterns cannot accommodate differences in the way data is formatted in different systems. For example, SSNs may be stored with or without hyphens, or grouped into two blocks instead of three. Each variation requires its own regex pattern definition.
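The specificity and variation points above can be illustrated with a short sketch, again assuming Python's re module. The variant names and the 5+4 layout used for the two-block case are assumptions made for illustration; the actual formats depend on the systems being scanned.

```python
import re

# Anchored pattern: only matches when the SSN is the entire input.
ANCHORED = re.compile(r"^\d{3}-\d{2}-\d{4}$")

# One pattern per hypothetical storage format. \b (word boundary) replaces
# the anchors so that values embedded in longer text can be found.
VARIANTS = {
    "hyphenated": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "unseparated": re.compile(r"\b\d{9}\b"),
    "two_blocks": re.compile(r"\b\d{5}-\d{4}\b"),  # assumed 5+4 grouping
}


def find_candidates(text: str) -> list[tuple[str, str]]:
    """Return (variant_name, matched_text) pairs found anywhere in text."""
    hits = []
    for name, pattern in VARIANTS.items():
        for match in pattern.finditer(text):
            hits.append((name, match.group()))
    return hits


sentence = "Customer SSN is 123-45-6789, stored elsewhere as 123456789."
print(ANCHORED.search(sentence))   # None: the anchors rule out embedded values
print(find_candidates(sentence))
```

Loosening the patterns this way finds both values in the sentence, but the unseparated variant will also flag any other nine-digit number, which is the false-positive trade-off described above.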

Super power discovery with context-defined pattern matching

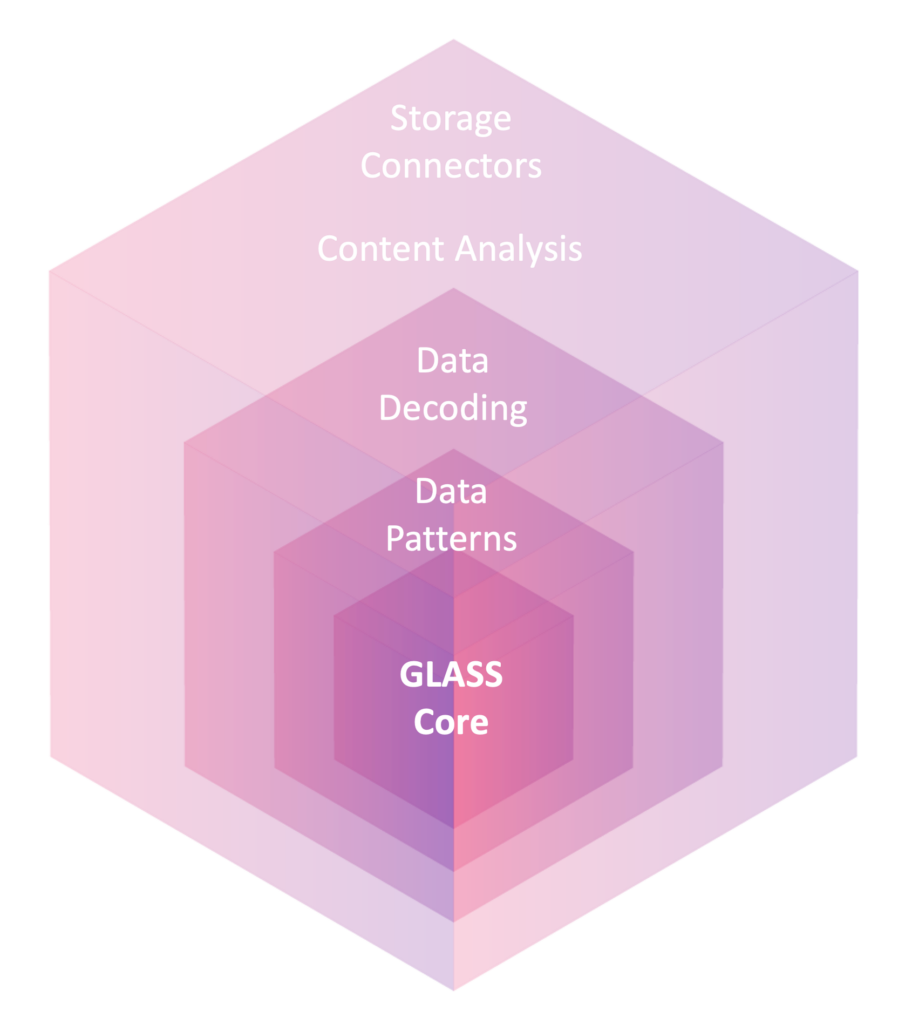

To overcome the shortcomings of regex and similar script-based approaches to data discovery, purpose-built data discovery solutions such as Enterprise Recon deliver context-defined pattern matching built on comprehensive pattern definitions. Our award-winning tool is powered by GLASS Technology™ — a proprietary search syntax that uses contextual data analysis alongside a series of validation methods to ensure accuracy while delivering rapid results.

The use of context-defined pattern matching technology is a game-changer for sensitive data discovery. Including context-based information as part of the discovery algorithm provides additional validation for true positive matches. This approach also supports pattern variation in a way that regex-style script-based searches cannot.
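The post does not describe how GLASS Technology works internally, and the following is not a representation of it. As a rough illustration of the general idea of using surrounding context as an extra signal, here is a minimal Python sketch in which the keyword list, the window size, and all names are hypothetical:

```python
import re

# Candidate pattern: hyphens optional, block-exclusion rules applied.
CANDIDATE = re.compile(
    r"\b(?!000|666|9\d{2})\d{3}-?(?!00)\d{2}-?(?!0000)\d{4}\b"
)
CONTEXT_TERMS = ("ssn", "social security")  # hypothetical keyword list
WINDOW = 40  # characters inspected on each side of a match (arbitrary)


def find_with_context(text: str) -> list[tuple[str, bool]]:
    """Return (matched_text, has_context) for each candidate in text."""
    results = []
    for match in CANDIDATE.finditer(text):
        start = max(0, match.start() - WINDOW)
        end = min(len(text), match.end() + WINDOW)
        nearby = text[start:end].lower()
        has_context = any(term in nearby for term in CONTEXT_TERMS)
        results.append((match.group(), has_context))
    return results


print(find_with_context("Employee SSN: 123-45-6789"))
print(find_with_context("Order number 123456789 has shipped"))
```

Both inputs contain a value that passes the pattern, but only the first has a nearby keyword to support it. A real discovery tool would combine many more signals than a single keyword check.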

Regex has served us well for over 70 years, but its limitations make it less effective in today’s complex data landscape. The advent of context-defined pattern matching technology offers a more robust and accurate solution. By considering the context of data in situ and employing multiple validation methods, this technology significantly reduces false positives and accommodates pattern variations. As privacy legislation and cybersecurity regulations become more stringent, businesses must move beyond manual, script-based methods and adopt advanced data discovery solutions.

If you’re ready to see how Enterprise Recon can elevate your discovery capabilities beyond regex, arrange a workshop with one of our experts.