In this article, we’ll explore the mainstream approaches organizations use to search for sensitive data across systems and networks.

We’ll take a brief step back in time to understand the origins of data discovery that underpin the approaches and techniques used today.

As well as explaining several of the real-world discovery methods businesses deploy, we’ll uncover the pros and cons of these approaches and look ahead to the future of data discovery enhanced by artificial intelligence and machine learning.

A brief history of data discovery

“If you want to understand today, you have to search yesterday.” — Pearl Buck

Today, data discovery is a phrase often associated with privacy compliance and data security. These are relatively modern concerns, however; it wasn’t until 1970 that the first privacy law was introduced, by the German state of Hessen. The first national legislation appeared in Sweden in 1973.

NIST, the US standards body behind widely used cybersecurity guidance, was still known as the National Bureau of Standards when it began its computer security work in the early 1970s, and it published security guidelines from the mid-1970s. The information security standards we recognize today were introduced between the mid-1990s and the early 2000s. BS 7799, the precursor to ISO/IEC 27001, was first published in 1995; PCI DSS v1.0 was released in December 2004.

The broad field of data discovery originated in the 1960s, when it was referred to as data mining: statisticians, economists, and financial researchers would analyze data to identify patterns. The practice became more mainstream, both as a field of academic interest and as a basis for data visualization and reporting, following the First International Conference on Knowledge Discovery and Data Mining (KDD-95), held in Montreal in 1995.

The value of data discovery for data security and privacy compliance gained traction with the introduction of information security standards and frameworks such as BS 7799 in 1995 (later adopted by ISO as ISO/IEC 17799 and ISO/IEC 27001:2005, now part of the ISO/IEC 27000 series of standards) and PCI DSS in 2004.

Fast forward to 2024, and more than 135 countries worldwide have introduced privacy legislation and regulation, such as the EU General Data Protection Regulation (GDPR), Australia’s Privacy Act, Canada’s Personal Information Protection and Electronic Documents Act (PIPEDA), and many more, further elevating the importance of data discovery as a fundamental capability for privacy compliance.

Approaches to data discovery

One of the biggest challenges organizations face in managing their data is getting a clear view of the data they hold. There are several approaches available, each with its own pros and cons. To select the most appropriate method, businesses must understand the purpose of the exercise and its objectives.

Data Flow Mapping

Many businesses start the process of data discovery by mapping a data flow diagram. This approach is quick to initiate using existing resources and can achieve basic results in a short period of time.

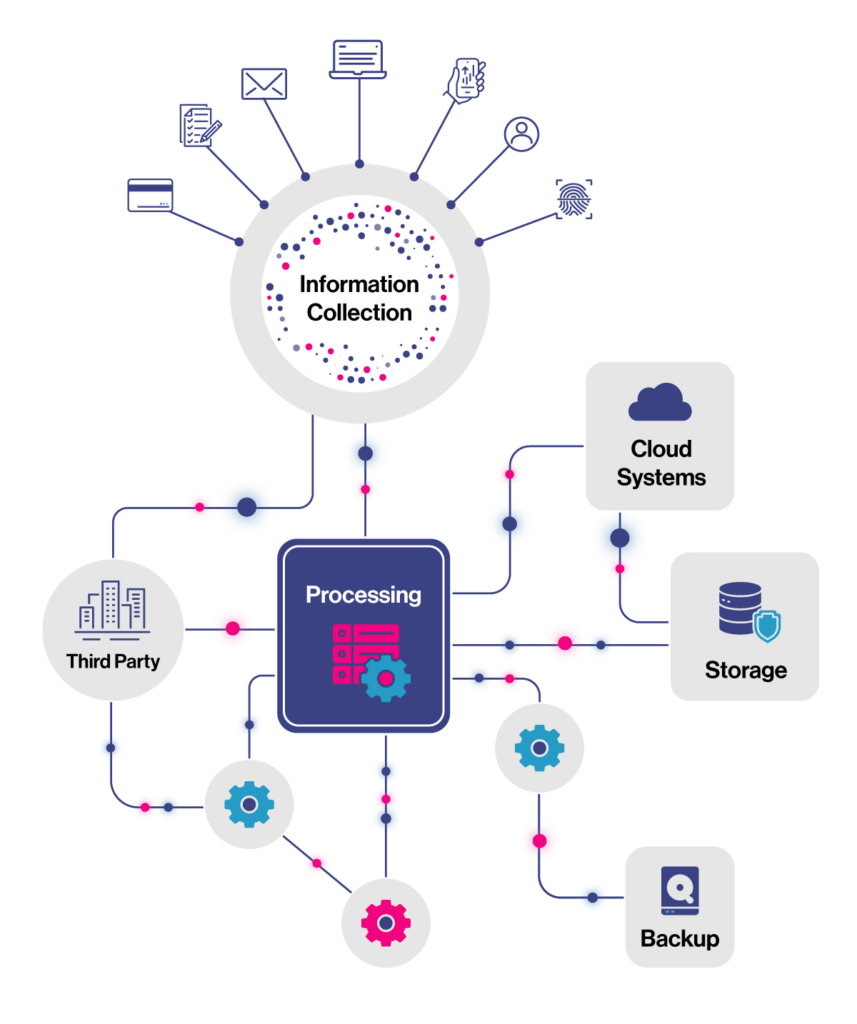

Data flow diagrams are useful for identifying the primary systems processing specific types of data. They illustrate the design of processes and how data is intended to move between systems, teams and suppliers. The quality of a data flow mapping depends on the knowledge and expertise of the personnel who describe how processes and systems interact.

However, these diagrams are typically based on formally engineered processes, and assume that there are no deviations from them.

What data flow diagrams cannot articulate are the many workarounds put in place when a system or process fails, or when well-meaning personnel are put under immense pressure to deliver, or when a colleague, customer or third party does not follow the process.

Data discovery using data flow diagrams cannot identify the data organizations do not know they have, collected through processes they do not know exist: the unknown unknowns.

Script-Based Discovery

Another common method businesses use is script-based discovery using regular expressions (“regex”). This may follow a data flow mapping exercise or be conducted independently.

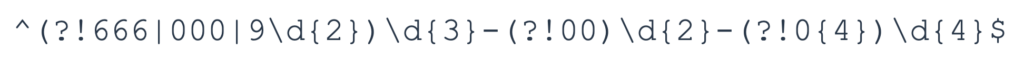

In this approach, regex is used to define character strings representing specific data types.
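As a rough illustration of what such a script might look like, the sketch below defines a few patterns and scans a string for them. The pattern names, the patterns themselves and the `scan_text` helper are assumptions made for this example; production patterns would need to be far more thorough.

```python
import re

# Illustrative patterns only; each one describes the character
# sequence expected for a single data type.
PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_16_digit": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def scan_text(text):
    """Return (data_type, matched_string) pairs found in the text."""
    hits = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((name, match.group()))
    return hits


if __name__ == "__main__":
    sample = "SSN 123-45-6789, card 4111 1111 1111 1111"
    for data_type, value in scan_text(sample):
        print(data_type, value)
```

In practice a script like this would be run over files, database exports or mailboxes rather than a single string, but the matching step is the same.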

While there are several resources online offering regex dictionaries for standard data patterns, most larger organizations will have these skills in-house. Because of this, script-based discovery can be a low-cost option for some businesses.

Script-based discovery often generates a high number of false positives, meaning the results include large amounts of data that matches the pattern but is not the target data type. More concerning from a data security perspective, it also has a high false negative rate: it fails to identify some true matches, so these are missing from the results.
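Both failure modes can be seen in a small sketch. The sample records are invented for illustration, and the two patterns and the `matching_records` helper are assumptions rather than a recommended rule set.

```python
import re

# A strict pattern for hyphenated SSNs, and a second pattern added to
# catch SSNs stored as nine unbroken digits.
HYPHENATED_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
UNFORMATTED_SSN = re.compile(r"\b\d{9}\b")

# Invented sample data for illustration.
SAMPLE_RECORDS = [
    "SSN: 123-45-6789",       # an SSN in the expected format
    "SSN: 123456789",         # an SSN stored without separators
    "Invoice no. 987654321",  # not an SSN, but also nine digits
]


def matching_records(patterns, records):
    """Return the records matched by at least one of the patterns."""
    return [r for r in records if any(p.search(r) for p in patterns)]


# The strict pattern alone misses the unformatted SSN (a false negative).
strict_hits = matching_records([HYPHENATED_SSN], SAMPLE_RECORDS)

# Adding the nine-digit pattern finds it, but also flags the invoice
# number (a false positive).
broad_hits = matching_records([HYPHENATED_SSN, UNFORMATTED_SSN], SAMPLE_RECORDS)

if __name__ == "__main__":
    print(strict_hits)
    print(broad_hits)
```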

These issues can make this approach both time-consuming and ineffective, and are caused by several shortcomings:

- Quality — Data discovery using regex is limited by the quality of the regex pattern used to search for data. Any errors or omissions in the regex pattern reduce the quality and reliability of any results.

- Specificity — Regex patterns need to be very specific to limit the number of false positives they generate. However, this need for specificity means that these patterns are unable to identify data that doesn’t exactly match the defined format.

- Validation — Validating the character types of matched data can help reduce false positives. Some types of data validation cannot be incorporated into regex patterns, such as Luhn algorithm checks for debit and credit card numbers.

- Variation — Regex patterns are not able to support differences in the way data may be formatted within different systems, due to the specificity aspect of the patterns. For example, SSNs may be stored with or without hyphens, or grouped into two blocks instead of three. Each variation would require a different regex pattern definition.
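The validation point can be made concrete with the Luhn check. In the sketch below, a regex only proposes candidates, and a separate function applies the checksum that the pattern itself cannot express. The candidate pattern and the function names are assumptions for this example; the sample numbers are a well-known test card number and an arbitrary digit sequence.

```python
import re

# Sixteen digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){15}\d\b")


def luhn_valid(number):
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if not digits:
        return False
    total = 0
    # Walk from the rightmost digit, doubling every second digit.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def find_card_numbers(text):
    """Return regex candidates that also pass the Luhn check."""
    return [
        match.group()
        for match in CARD_CANDIDATE.finditer(text)
        if luhn_valid(match.group())
    ]


if __name__ == "__main__":
    sample = "Order 1234 5678 9012 3456 paid with card 4111-1111-1111-1111."
    print(find_card_numbers(sample))
```

The regex step on its own would report both sixteen-digit sequences in the sample; the checksum step discards the order number.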

Discovery Using Context-Defined Pattern Matching

More advanced data discovery solutions use a combination of methods to identify and then validate target data types, achieving a greater degree of accuracy than manual data mapping and script-based discovery.

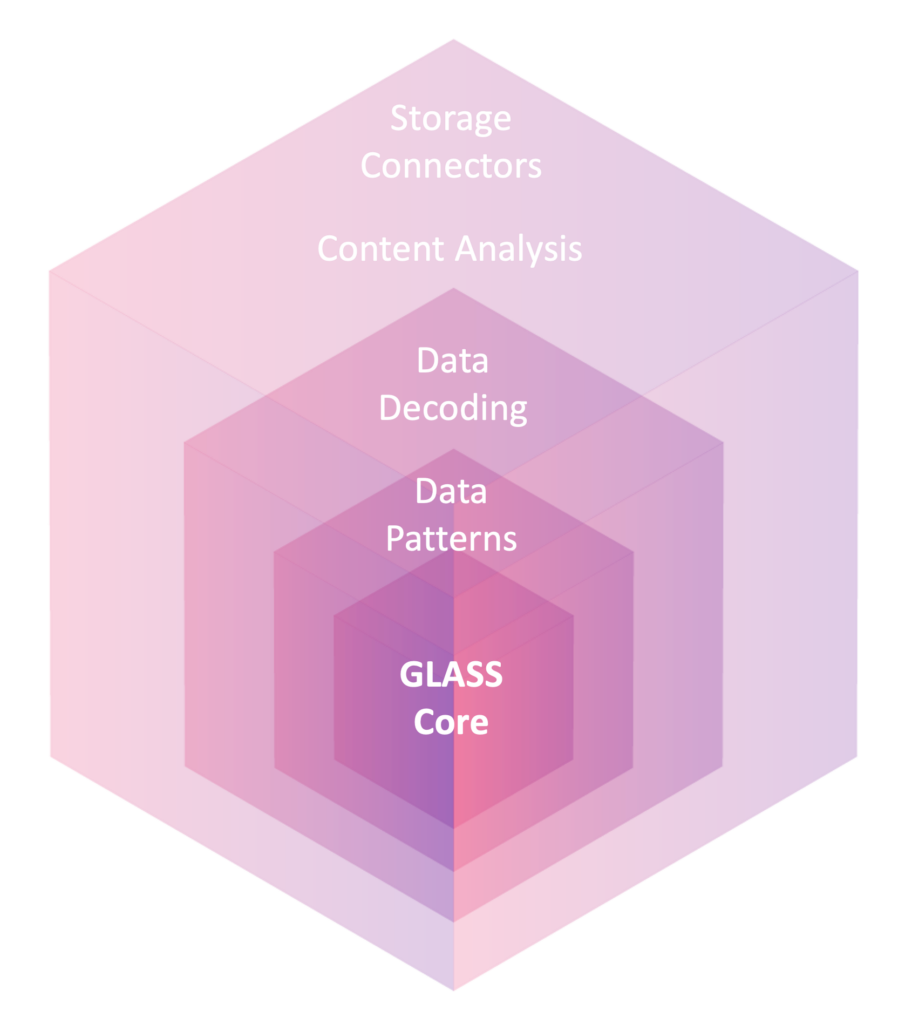

Ground Labs’ Enterprise Recon™ uses a proprietary search syntax to define target data types. The Ground Labs Accurate Search Syntax (GLASS™) technology that underpins the solution allows both more flexibility and greater specificity in its target definitions.

Its custom pattern-matching algorithm is built on a succession of optimized filters, which means it disregards irrelevant data early in the match process. However, its biggest differentiator, and the capability that drives improved performance over other discovery methods, is the use of context to improve outcomes.

Most data has information located close to it that provides an indication of what it represents. For example, database fields are labelled with the type of information they are intended to contain, such as name, address, payment information, etc. In unstructured data stores too, similar contextual information is often available to help inform and validate the discovery process. When sharing address details by email, the sender is likely to write something that refers to an address, e.g., “My address is ….” or “Here’s the address for ….”

Data discovery solutions that use context-defined pattern matching techniques combine a script-based pattern search with additional context defined within the search syntax. This helps overcome the limitations of script-based discovery methods by:

- Improving the quality of the definitions used to search for data.

- Supporting greater variation and flexibility within the search syntax, so that data can be identified even if it appears in several formats in different locations. The syntax is not as limited by the specificity requirement of regex pattern definitions.

- Using contextual information to validate potential matches as well as incorporating validation methods that cannot be incorporated into regex patterns.
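The general idea of combining a pattern search with nearby context can be sketched generically as follows. This is not GLASS syntax or the Enterprise Recon implementation; the keyword list, the window size and the `find_with_context` helper are all assumptions chosen for illustration.

```python
import re

# A tolerant candidate pattern: nine digits with optional separators.
SSN_CANDIDATE = re.compile(r"\b\d{3}[- ]?\d{2}[- ]?\d{4}\b")

# Hypothetical context settings: words expected near a real SSN, and
# how many characters on each side of a candidate to inspect.
CONTEXT_KEYWORDS = ("ssn", "social security")
CONTEXT_WINDOW = 40


def find_with_context(text):
    """Return candidates that have a context keyword close to them."""
    results = []
    for match in SSN_CANDIDATE.finditer(text):
        start = max(0, match.start() - CONTEXT_WINDOW)
        end = min(len(text), match.end() + CONTEXT_WINDOW)
        window = text[start:end].lower()
        if any(keyword in window for keyword in CONTEXT_KEYWORDS):
            results.append(match.group())
    return results


if __name__ == "__main__":
    print(find_with_context("Employee social security number: 123 45 6789"))
    print(find_with_context("Invoice no. 987654321 was paid."))
```

Because nearby context does the validating, the candidate pattern can afford to accept several formats without flagging every nine-digit number it encounters.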

Using Artificial Intelligence for Data Discovery

Solutions are appearing on the market utilizing artificial intelligence and machine learning (AI/ML) that claim to deliver more effective data discovery.

While several of these may use cleverly marketed script-based and non-AI technologies in the background, AI/ML-based approaches to data discovery are gaining traction.

AI/ML solutions can assimilate vast quantities of data quickly, and with the right training they can be effective at identifying common data types.

The challenge with these models is that, to be maximally effective, they need to be trained on the specific datasets, types and formats of each organization looking to use them. Without this specific training, such solutions may be less accurate than context-defined pattern matching products.

For example, if training data for a model only presents data types in a specific format, such as 16-digit credit card numbers presented as four blocks of four numerical characters, the AI/ML may be unable to identify the same data presented as a single string of digits.

Many AI/ML models operate as “black boxes,” and it is not always clear how the model derives its outputs. While results may appear accurate initially, errors may emerge with wider use because the model has come to an inaccurate conclusion based on its training input.

A striking example of this comes from medicine and cancer research. An AI model trained to identify malignant skin lesions initially seemed promising, until researchers recognized that its decisions were influenced by whether a ruler appeared in the image.

Where AI/ML is used to process and analyze personal information, these models must be trained using similar data. Organizations need to be aware of their legal obligations around use of personal data in this way. Most will need to seek additional consent to do so or risk violating local and cross-border privacy legislation.

Another important consideration for AI/ML data discovery solutions, where they are delivered as a service rather than operating wholly within an organization’s internal environment, is how they analyze and evaluate data to identify the target data. If this process requires duplication, transmission and processing of information by a third party, there may be additional privacy and data security concerns.

Optimizing data discovery for the future

In this article, we’ve highlighted the pros and cons of several data discovery techniques, looking back over the history of discovery from its origins in the data mining of the 1960s to its role in privacy compliance and data security today.

Manual data mapping and regex-based discovery methods are becoming obsolete in today’s vast data landscape. Resource intensive and of limited accuracy, these approaches are no longer fit for purpose.

While there are currently many shortcomings with AI/ML-driven data discovery, AI/ML can improve the performance of advanced context-defined pattern matching solutions like Enterprise Recon.

The constraints around training AI/ML using sensitive information such as personal data are not an issue when target data types are defined using a highly performant search syntax like GLASS.

Using AI/ML to analyze, derive and define the contextual information used to validate data matches, and to interpret legacy regex-based search patterns, can be a useful aid in defining custom data patterns for tools such as Enterprise Recon.

To find out how Ground Labs can support your organization and help you get started on your data discovery journey, powered by Enterprise Recon, arrange a workshop with one of our experts today!